Key Takeaways

- Identity and access management (IAM) has traditionally relied on static rules and manual processes that struggle to keep pace with the dynamic nature of modern threats, leading to many organizations perpetually reacting to incidents rather than preventing them.

- AI—particularly machine learning—is changing this paradigm by introducing adaptive, intelligent security that learns and evolves alongside threats.

- Data suggest that most organizations understand the role AI and machine learning tools can play in mitigating security threats and that many organizations are already leveraging AI and machine learning to proactively identify compromised credentials, spot vulnerabilities, and detect anomalous access patterns.

- The most successful organizations understand the strengths of AI as well as its limitations, and they are strategic about finding the right balance between the technology and human oversight to ensure the most effective cybersecurity and business identity theft protection for their businesses.

- Taking a crawl, walk, run approach to integrating AI into security strategies can help organizations navigate implementation challenges and dramatically reduce their risk exposure.

Leveraging AI in the identity credential battle:

The good, the bad, and the checkmate.

It’s no secret that the various forms of AI have supercharged cybercriminal capabilities. Indeed, findings from our 2025 State of Cybersecurity Preparedness research show that more than three-quarters (77%) of organizations have high awareness of AI’s role in emerging identity-based attacks.

They understand that AI makes it much easier for bad actors to get into the game, decreasing the required level of computer competency involved in writing scripts and scanning for vulnerabilities. At the same time, IT leaders respect the fact that AI amplifies the scale and sophistication of identity-based attacks, empowering attackers to continually grow more dynamic and creative in their approaches. As just one example, poorly implemented AI increases the potential for data leakage and data poisoning, empowering bad actors to successfully pull off feats such as jailbreaking from one database into another to acquire privileged information or to inject or tamper with sensitive data sets.

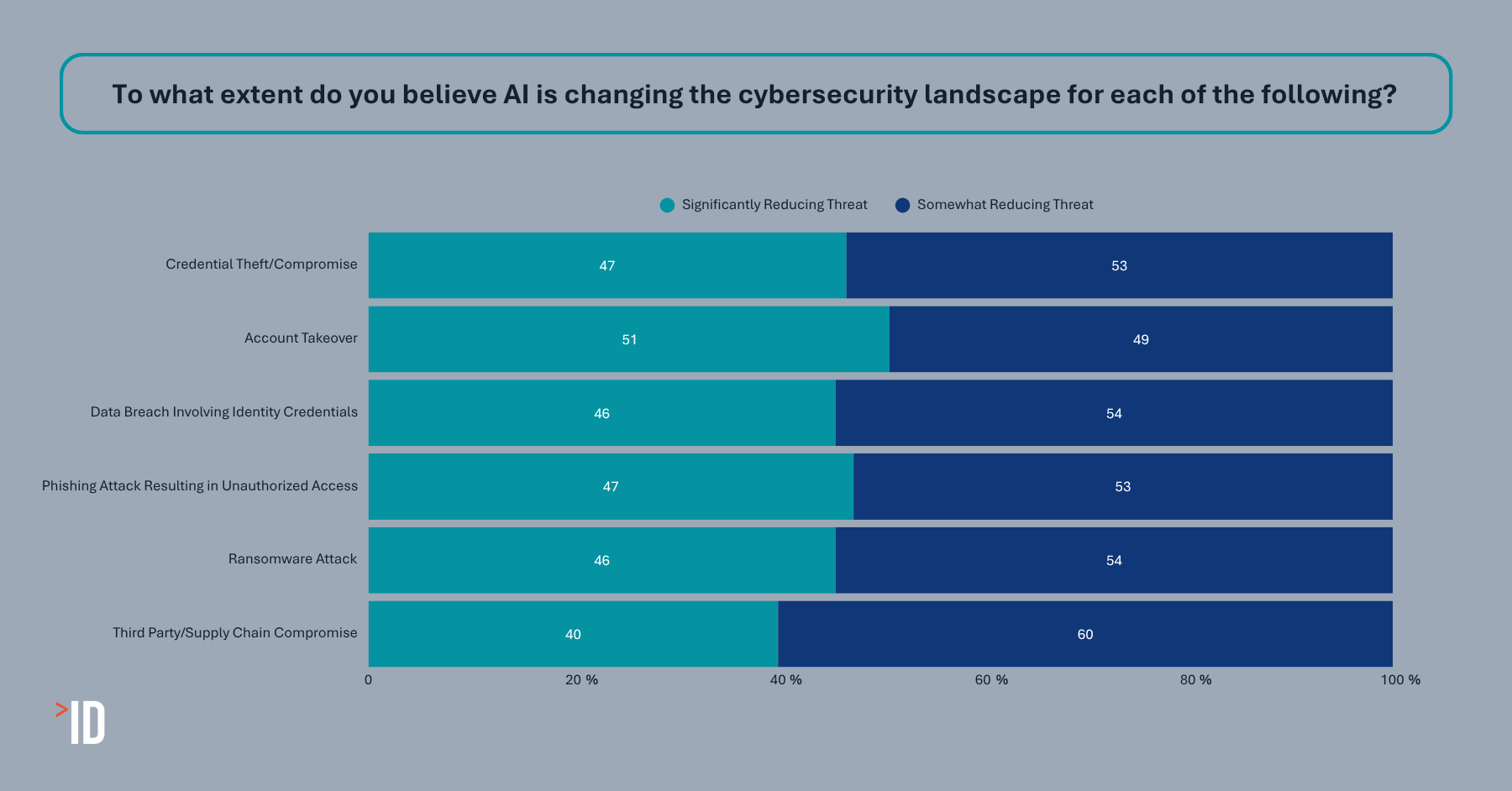

While the possibilities to do more damage more quickly seem endless, the good news is that the same technology that enables black hats can also be leveraged by white hats, and our data indicate that’s exactly what’s happening in organizations across various industries. Companies appear just as aware of the positive side of AI as the negative, with a majority of the IT leaders we surveyed agreeing that AI is at least marginally reducing various identity credential security threats, including credential theft, account takeover, and data breaches involving identity credentials. Nearly a quarter of leaders go further, reporting that AI is having a significant impact on threat reduction.

Even more promising, nearly all companies are proactively leveraging AI or machine learning tools to fortify their defenses against identity credential theft and cyberattacks. Companies are most likely to be using AI to look for signs of compromised credentials, vulnerabilities within their own networks, or suspicious access attempts.

In today’s landscape, where AI can do as much good as it can harm, one thing is clear: AI holds the keys to mitigating emerging risks, including the ones AI itself is introducing. Companies wishing to outpace attackers and come out ahead in the perpetual game of cat and mouse will need to stay vigilant about exploring the continuously evolving capabilities of AI and integrating the most effective solutions into their security platforms.

Investing in security that keeps getting smarter:

Machine learning as the backbone of next-generation identity security systems.

While generative AI is causing much of the hype these days, machine learning, an older subset of artificial intelligence with more established results, is doing the heavy lifting when it comes to helping companies gain an advantage against their attackers. Machine learning technologies excel at finding patterns in vast amounts of data, and they continuously learn from network behavior, adapting protection mechanisms in real time to address emerging threats. This is precisely what’s needed to identify suspicious behavior among millions of daily access events (far more than human analysts can meaningfully review) and to transform overwhelming data volumes into actionable insights that keep organizations and their information safe.

Machine learning technologies are especially well suited to security measures, including:

Anomaly detection:

Traditional security systems operate on if-then logic: if a user attempts to access a system outside working hours, then flag the attempt. While such rules are effective against basic attacks, sophisticated adversaries can easily bypass defenses this predictable.

Machine learning anomaly detection goes beyond rule-based security to analyze historical access patterns across multiple dimensions—time, location, device characteristics, resource access, typing patterns, and more—and establish behavioral baselines for each identity. When activity deviates significantly from these baselines, the system can flag potential compromises, even if the activity doesn’t violate any predefined rules.

For example, suppose an executive who typically accesses financial reports from her office computer during business hours suddenly downloads customer databases at 3 AM from an unrecognized device in another country. That activity would trigger immediate alerts, even without explicit rules forbidding such access.
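To make the idea of a behavioral baseline concrete, here is a minimal sketch in Python. It is not a production detector: the `Baseline` structure, the `anomaly_score` function, and the weights for unrecognized devices and countries are all hypothetical choices for illustration, and a real system would model many more dimensions with a trained model rather than a simple z-score.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Baseline:
    """Per-identity history of past access events (hypothetical structure)."""
    login_hours: list = field(default_factory=list)
    devices: set = field(default_factory=set)
    countries: set = field(default_factory=set)

    def observe(self, hour: int, device: str, country: str) -> None:
        self.login_hours.append(hour)
        self.devices.add(device)
        self.countries.add(country)


def anomaly_score(baseline: Baseline, hour: int, device: str, country: str) -> float:
    """Return a score that grows as the event deviates from the baseline."""
    score = 0.0
    if len(baseline.login_hours) >= 2:
        mu = mean(baseline.login_hours)
        sigma = pstdev(baseline.login_hours) or 1.0  # avoid division by zero
        score += abs(hour - mu) / sigma  # z-score of the login hour
    if device not in baseline.devices:
        score += 2.0  # illustrative weight for an unrecognized device
    if country not in baseline.countries:
        score += 3.0  # illustrative weight for a previously unseen country
    return score


# Build a baseline from typical office-hours activity.
baseline = Baseline()
for h in (9, 10, 11, 14, 16):
    baseline.observe(h, "office-desktop", "US")

usual = anomaly_score(baseline, 10, "office-desktop", "US")
odd = anomaly_score(baseline, 3, "unknown-laptop", "RO")
print(f"usual={usual:.2f} odd={odd:.2f}")
```

No rule here says "3 AM is forbidden"; the 3 AM event simply scores much higher than the 10 AM one because it sits far from this identity's own history. One simplification to note: the sketch treats the hour as a linear value, so it does not handle the wrap-around between 23:00 and 00:00.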

Classifying threats:

While anomaly detection excels at finding unusual patterns, supervised learning models help security teams classify and prioritize threats. These models train on labeled datasets of known attack patterns—password spraying, credential stuffing, or privilege escalation attempts—allowing them to recognize similar attacks in the future.

The power of supervised learning lies in its ability to generalize from labeled examples. After training on examples of account takeover attempts, the system can identify new variants of the same attack technique, even if the specific implementation differs from previous incidents.
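As a rough illustration of training on labeled examples and generalizing to unseen ones, the sketch below uses a nearest-centroid classifier, one of the simplest supervised methods. The feature choice (distinct usernames and distinct passwords tried from one source) and every number in `TRAINING` are invented for the example; real models use far richer features and far more data.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled data: each row is
# (distinct usernames tried, distinct passwords tried) for one source IP.
TRAINING = [
    ((200.0, 2.0), "password_spraying"),      # many accounts, few common passwords
    ((150.0, 1.0), "password_spraying"),
    ((180.0, 3.0), "password_spraying"),
    ((200.0, 200.0), "credential_stuffing"),  # many leaked username/password pairs
    ((150.0, 148.0), "credential_stuffing"),
    ((180.0, 175.0), "credential_stuffing"),
    ((1.0, 1.0), "benign"),
    ((2.0, 2.0), "benign"),
    ((1.0, 2.0), "benign"),
]


def fit_centroids(rows):
    """Average the feature vectors of each label (the training step)."""
    grouped = defaultdict(list)
    for features, label in rows:
        grouped[label].append(features)
    return {
        label: tuple(sum(col) / len(col) for col in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(centroids, features):
    """Assign the label whose centroid is closest to the new observation."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = fit_centroids(TRAINING)
# None of these observations appears in the training data.
print(classify(centroids, (120.0, 4.0)))    # password_spraying
print(classify(centroids, (130.0, 125.0)))  # credential_stuffing
print(classify(centroids, (1.0, 1.5)))      # benign
```

The observations being classified differ from every training row, yet each lands with the attack pattern it most resembles, which is the generalization property described above.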

Continuous authentication:

Traditional authentication happens at a single point in time—when a user logs in—creating a significant vulnerability window if credentials are compromised after initial authentication.

In contrast, AI-powered continuous authentication systems constantly evaluate the probability that the current user is legitimate. By analyzing behavioral biometrics—keystroke dynamics, mouse movements, touchscreen pressure, scrolling patterns—these systems maintain an ongoing trust score. If suspicious behavior emerges, systems can automatically require step-up authentication or restrict access privileges until identity is reconfirmed.
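One simple way to picture an ongoing trust score is as an exponential moving average of behavioral match scores. In the sketch below, the `Session` class, the smoothing factor, and both thresholds are assumptions made for illustration; the `match` value stands in for whatever a behavioral biometrics model would output.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Tracks an ongoing trust score in [0, 1] for one logged-in session."""
    trust: float = 1.0
    smoothing: float = 0.5       # weight given to the newest observation
    step_up_below: float = 0.6   # illustrative thresholds, not recommendations
    restrict_below: float = 0.3

    def update(self, match: float) -> str:
        """Blend a new behavioral match score (0 = no match, 1 = perfect match)."""
        self.trust = (1 - self.smoothing) * self.trust + self.smoothing * match
        if self.trust < self.restrict_below:
            return "restrict_access"
        if self.trust < self.step_up_below:
            return "step_up_authentication"
        return "allow"


session = Session()
# Two normal readings, then behavior that stops matching the legitimate user.
actions = [session.update(m) for m in (0.9, 0.95, 0.1, 0.05, 0.0)]
print(actions)
```

With these inputs the session is allowed twice, then asked for step-up authentication, then restricted. Smoothing keeps a single odd keystroke burst from locking out a legitimate user, while a sustained mismatch still drives the score down within a few observations.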

Automating dormant account cleanup:

Orphaned identities represent a major security gap for organizations. By analyzing behavior and using linked data from other systems, such as HR platforms, AI models can help look for users who have left the organization but still have active accounts. The models can also detect and disable service accounts without a clear owner or purpose or any account that goes unused for an extended period of time, helping companies eliminate a significant vulnerability and access point for bad actors.
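The cross-system check described here can be sketched as a join between an HR roster and an account directory. The example below is a rule-based stand-in rather than an AI model, and the record layout, account names, and 90-day idle threshold are all hypothetical; it only shows the kind of linked data such a model would work from.

```python
from datetime import date, timedelta

# Hypothetical exports: an HR roster and an account directory.
ACTIVE_EMPLOYEES = {"asmith", "bjones"}
ACCOUNTS = [
    {"name": "asmith", "type": "user", "owner": "asmith", "last_used": date(2025, 6, 1)},
    {"name": "cdoe", "type": "user", "owner": "cdoe", "last_used": date(2025, 5, 20)},
    {"name": "svc-backup", "type": "service", "owner": None, "last_used": date(2025, 6, 2)},
    {"name": "bjones", "type": "user", "owner": "bjones", "last_used": date(2024, 11, 3)},
]


def review_accounts(accounts, active_employees, today, max_idle_days=90):
    """Return {account name: reason} for accounts that warrant cleanup review."""
    findings = {}
    for acct in accounts:
        if acct["type"] == "user" and acct["owner"] not in active_employees:
            findings[acct["name"]] = "owner no longer in HR roster"
        elif acct["type"] == "service" and acct["owner"] is None:
            findings[acct["name"]] = "service account without an owner"
        elif today - acct["last_used"] > timedelta(days=max_idle_days):
            findings[acct["name"]] = "unused beyond idle threshold"
    return findings


findings = review_accounts(ACCOUNTS, ACTIVE_EMPLOYEES, today=date(2025, 6, 10))
for name, reason in findings.items():
    print(f"{name}: {reason}")
```

Note that `cdoe` is flagged even though the account was used recently: activity alone would not reveal that its owner has left, which is why linking to HR data matters.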

Correcting excessive permissions:

According to a 2025 Ponemon-Sullivan Privacy Report, 34% of third-party and 45% of internal users have more access to systems and data than they need to do their jobs. A recent SailPoint white paper shows that traditional periodic access reviews (PARs) typically reduce entitlements by 20–40% (up to 50–60% in some cases) after the first cycle of access certifications, but such reviews are time consuming and remain heavily reliant on humans. AI models can perform intelligent access reviews in a fraction of the time with much greater accuracy and can provide previously unavailable insights. These reviews flag issues such as deviations from least privilege, outlier permissions compared to similar roles, and high-risk access combinations requiring human review. Such models streamline access governance systems and can recommend privilege reductions based on actual usage patterns. The result is significantly reduced exposure without the administrative burden of manual reviews.
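Two of the checks mentioned above, outlier permissions relative to peers and reductions based on actual usage, can be approximated with simple set arithmetic. The sketch below assumes hypothetical `GRANTS` and `USED` data and an arbitrary 25% peer-share cutoff; it illustrates the comparison, not any vendor's review engine.

```python
from collections import Counter

# Hypothetical entitlement data: user -> (role, granted permissions).
GRANTS = {
    "ana": ("analyst", {"reports.read", "crm.read"}),
    "ben": ("analyst", {"reports.read", "crm.read"}),
    "cy": ("analyst", {"reports.read", "crm.read", "payroll.write"}),
    "dee": ("analyst", {"reports.read", "crm.read"}),
}
# Hypothetical usage log: permissions each user actually exercised recently.
USED = {
    "ana": {"reports.read", "crm.read"},
    "ben": {"reports.read"},
    "cy": {"reports.read", "crm.read"},
    "dee": {"reports.read", "crm.read"},
}


def outlier_permissions(grants, max_peer_share=0.25):
    """Flag permissions held by at most max_peer_share of users in the same role."""
    role_sizes = Counter(role for role, _ in grants.values())
    holders = Counter((role, p) for role, perms in grants.values() for p in perms)
    return {
        user: sorted(
            p for p in perms if holders[(role, p)] / role_sizes[role] <= max_peer_share
        )
        for user, (role, perms) in grants.items()
    }


def unused_permissions(grants, used):
    """List granted permissions with no recorded use (candidates for removal)."""
    return {
        user: sorted(perms - used.get(user, set()))
        for user, (_, perms) in grants.items()
    }


outliers = outlier_permissions(GRANTS)
unused = unused_permissions(GRANTS, USED)
print(outliers["cy"])  # ['payroll.write']
print(unused["ben"])   # ['crm.read']
```

In this toy data, `payroll.write` stands out twice: only one of four analysts holds it, and that analyst has never used it. Findings like these would still go to a human reviewer before any privilege is removed.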

AI tools can further improve access rights by enabling context-aware authorization. This capability dynamically adjusts access privileges based on risk factors such as location and network trustworthiness, device security posture, times of access, current threat intelligence, previous activity patterns, and sensitivity of requested resources. For example, a user might have full access to sensitive customer data when working from a corporate office on a managed device during business hours. The same user accessing from a public Wi-Fi network on a personal device at midnight might receive read-only access or require additional authentication steps.
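The office-versus-public-Wi-Fi example can be expressed as a small risk-scoring function. Everything in the sketch below is an assumption for illustration: the `Context` fields, the weights, the 1 to 3 sensitivity scale, and the cutoffs. A deployed system would derive such weights from data and threat intelligence rather than hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    managed_device: bool
    trusted_network: bool
    hour: int                  # local hour of the request, 0 to 23
    resource_sensitivity: int  # 1 (low) to 3 (high), hypothetical scale


def decide(ctx: Context) -> str:
    """Map request context to an access level using illustrative risk weights."""
    risk = 0
    risk += 0 if ctx.managed_device else 2
    risk += 0 if ctx.trusted_network else 2
    risk += 0 if 8 <= ctx.hour < 18 else 1
    risk *= ctx.resource_sensitivity  # sensitive resources amplify the risk
    if risk == 0:
        return "full_access"
    if risk <= 6:
        return "read_only"
    return "step_up_authentication"


office = decide(Context(managed_device=True, trusted_network=True, hour=10, resource_sensitivity=3))
hotel = decide(Context(managed_device=True, trusted_network=False, hour=0, resource_sensitivity=2))
cafe = decide(Context(managed_device=False, trusted_network=False, hour=0, resource_sensitivity=3))
print(office, hotel, cafe)
```

The same user receives full access from the office, read-only access from a managed laptop on an untrusted network at midnight, and a step-up challenge from a personal device on public Wi-Fi.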

Enabling predictive and proactive security:

Rather than waiting for attacks to occur, AI enables a predictive security posture where companies can forecast potential vulnerabilities before they’re exploited, identify likely attack paths, and prioritize remediation efforts. Security teams can proactively hunt for threats, identify subtle connections between seemingly unrelated events, and surface attack campaigns that might otherwise remain hidden. Just as important, they can use risk scoring to prioritize the greatest threats and accelerate investigations, improving efficiency, reducing response time, and helping to prevent analyst burnout.

Automating defenses and addressing systemic vulnerabilities:

When security incidents occur or anomalous access is detected, AI offers the ability to close ports or block IP addresses automatically, helping companies limit the damage and prevent connections with these high-risk access points going forward. AI also accelerates root cause analysis by automatically reconstructing event timelines and identifying the origin of breaches, giving organizations further information to improve their security posture. Ultimately, by recognizing patterns across multiple incidents, AI enables organizations to address systemic vulnerabilities rather than treating each incident in isolation.
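A minimal sketch of the automated-blocking idea follows. The `AutoBlocker` class and its three-strike threshold are hypothetical, and the addresses come from ranges reserved for documentation; in practice the block would be pushed to a firewall or identity provider rather than held in memory.

```python
from collections import Counter


class AutoBlocker:
    """Blocks a source IP once it accumulates enough anomalous events."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.strikes = Counter()
        self.blocked = set()

    def report_anomaly(self, ip: str) -> bool:
        """Record an anomaly; return True if the IP is now blocked."""
        self.strikes[ip] += 1
        if self.strikes[ip] >= self.threshold:
            self.blocked.add(ip)
        return ip in self.blocked

    def allows(self, ip: str) -> bool:
        """Future connections are refused once an IP has been blocked."""
        return ip not in self.blocked


blocker = AutoBlocker(threshold=3)
results = [blocker.report_anomaly("203.0.113.7") for _ in range(3)]
blocker.report_anomaly("198.51.100.4")  # a single anomaly is not enough to block
print(results, blocker.allows("203.0.113.7"), blocker.allows("198.51.100.4"))
```

Requiring several anomalies before blocking is one way to limit false positives; the right threshold depends on how costly a wrongly blocked connection is compared with a missed attack.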

Striking the right balance between AI and human oversight:

Why people are a critical part of the equation.

It’s easy to get caught up in the AI hype, and modern cybersecurity approaches certainly do demand a healthy understanding of what AI can do and how to optimize it. But AI can’t do everything. The key to success is to recognize what’s possible and what’s not, and to allocate roles and responsibilities accordingly.

Generally, while AI excels at pattern recognition and processing vast data volumes, human security professionals provide the crucial context, judgment, and decision-making in ambiguous situations that lead to world-class security. Successful organizations typically follow a crawl-walk-run approach, as described below, when integrating AI into any aspect of the business, and cybersecurity and business identity theft protection are no exception.

Crawl:

AI provides recommendations that humans review and approve.

Walk:

AI handles routine cases autonomously while escalating edge cases.

Run:

AI manages most security operations with human oversight of significant decisions and continuous system improvement.
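The three stages can be read as a routing policy for AI recommendations. The sketch below is one possible interpretation: the `Stage` enum, the `route` function, and the 0.9 confidence cutoff used to separate routine cases from edge cases are assumptions, not part of any standard.

```python
from enum import Enum


class Stage(Enum):
    CRAWL = "crawl"
    WALK = "walk"
    RUN = "run"


def route(stage: Stage, confidence: float, high_impact: bool) -> str:
    """Decide who acts on an AI recommendation at a given maturity stage."""
    if stage is Stage.CRAWL:
        # Every recommendation is reviewed and approved by a person.
        return "human_review"
    if stage is Stage.WALK:
        # Routine means confident and low impact; anything else is an edge case.
        if confidence >= 0.9 and not high_impact:
            return "auto_apply"
        return "escalate"
    # RUN: AI acts by default; humans retain oversight of significant decisions.
    return "human_review" if high_impact else "auto_apply"


print(route(Stage.CRAWL, 0.99, False))  # human_review
print(route(Stage.WALK, 0.95, False))   # auto_apply
print(route(Stage.WALK, 0.60, False))   # escalate
print(route(Stage.RUN, 0.60, True))     # human_review
```

Encoding the stage as an explicit setting makes the progression auditable: moving from crawl to walk becomes a deliberate configuration change rather than a gradual, undocumented shift in who approves what.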

Ensuring a successful implementation:

The best approach starts now.

As attackers increasingly leverage AI to enhance their capabilities, defensive AI is a necessity for maintaining effective identity security. So, the question is no longer whether to adopt AI for identity security, but how quickly and effectively organizations can integrate these capabilities into their security strategies.

Of course, companies will face hurdles, some of which will work themselves out as the technologies continue to improve and as companies improve the quality and quantity of their own data. For example, current machine learning models often function as “black boxes,” making it difficult to understand why they flag specific activities as suspicious. Looking ahead, next-generation explainable AI systems will provide clear rationales for security decisions, enhancing trust and enabling more effective human-AI collaboration. In the meantime, companies can focus on higher quality data input to improve the accuracy and recall of tools.

Another limitation specific to small and midsized companies is the lack of resources to facilitate supervised and unsupervised machine learning, which require curating millions of rows of labeled and unlabeled data. Smaller organizations can still benefit from AI models through federated learning, which allows multiple organizations to collectively train AI security models without sharing sensitive information.
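The core step of federated learning is commonly implemented as federated averaging: each organization trains locally and shares only model weights, which are then combined in proportion to how much data each one trained on. The sketch below shows that combining step alone; the weight vectors and sample counts are invented, and real deployments add safeguards such as secure aggregation that are omitted here.

```python
def federated_average(updates):
    """Combine local model weights, weighting each by its training-set size.

    `updates` is a list of (weights, sample_count) pairs. Only these weights,
    never the underlying access logs, leave each organization.
    """
    total = sum(count for _, count in updates)
    size = len(updates[0][0])
    return [
        sum(weights[i] * count for weights, count in updates) / total
        for i in range(size)
    ]


# Hypothetical weight vectors from three organizations' locally trained models.
org_updates = [
    ([0.25, 1.0], 100),
    ([0.5, 2.0], 300),
    ([1.0, 0.0], 100),
]
global_weights = federated_average(org_updates)
print(global_weights)
```

The organization with 300 samples pulls the shared model toward its weights more strongly than the two with 100 each, so a small company contributes what it can while still receiving a model informed by everyone's data.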

As organizations find ways to overcome adoption hurdles and to successfully and appropriately implement AI technologies in their security protocols, they gain a critical advantage in the ongoing cybersecurity arms race—one they can continue building upon to reduce risk exposure while enabling business agility to ultimately thrive in today’s digital environment.